HPC2021 System

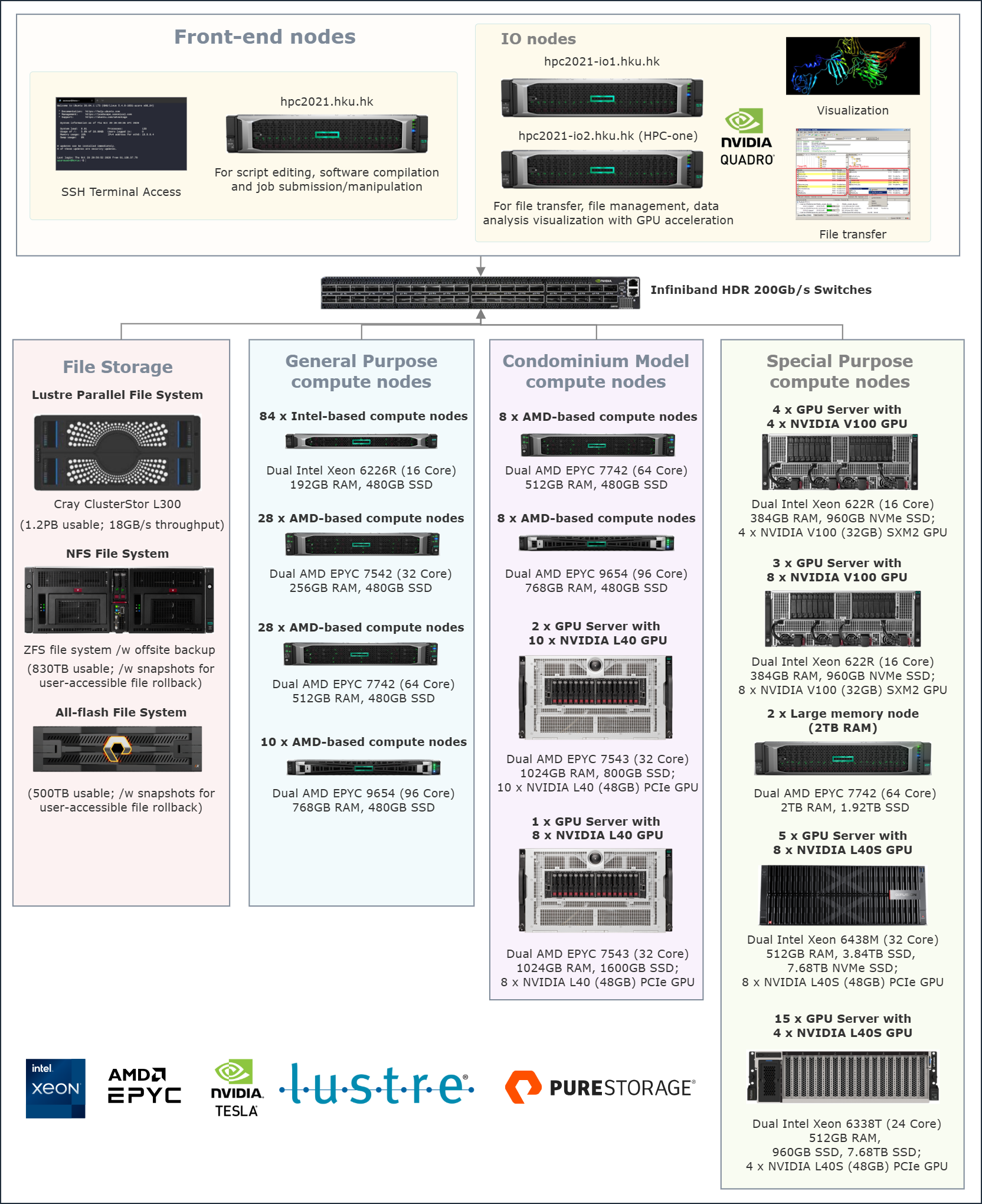

The HPC2021 system runs Rocky Linux 8 and uses the SLURM workload manager for job scheduling. The cluster consists of 150 general-purpose (GP) compute nodes, 29 special-purpose (SP) compute nodes, 19 condo nodes and 3 frontend nodes, interconnected with 100Gb/s InfiniBand. Condo nodes are compute nodes hosted in HPC2021 but owned by an individual PI, department or faculty. All users can access them through the job scheduler, but the owners have the highest priority. I/O-intensive workloads are supported by a Lustre parallel file system (Cray ClusterStor), while home directories are served by a globally accessible NFS file system. All inter-node communication (MPI and Lustre traffic) goes through a low-latency Mellanox High Data Rate (HDR) InfiniBand network.
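Jobs reach the compute nodes through SLURM. The Python helper below is a minimal sketch that writes a batch script from a few parameters, assuming standard `sbatch` directives. The names `JobSpec` and `make_sbatch_script` are hypothetical. Any partition name you pass in is also hypothetical, so check the site's own documentation for the real partition and QoS names before submitting.

```python
"""Build a SLURM batch script as text (hypothetical helper, not an HPC2021 tool)."""

from dataclasses import dataclass
from typing import Optional


@dataclass
class JobSpec:
    job_name: str
    command: str                     # what the job runs, e.g. "srun ./my_app"
    nodes: int = 1
    ntasks_per_node: int = 1
    cpus_per_task: int = 1
    mem: str = "4G"                  # memory per node, SLURM --mem syntax
    time: str = "01:00:00"           # wall-clock limit, HH:MM:SS
    gpus: int = 0                    # GPUs per node, requested via --gres=gpu:N
    partition: Optional[str] = None  # hypothetical; use the site's real partition name


def make_sbatch_script(spec: JobSpec) -> str:
    """Return the text of a batch script with one #SBATCH line per option."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={spec.job_name}",
        f"#SBATCH --nodes={spec.nodes}",
        f"#SBATCH --ntasks-per-node={spec.ntasks_per_node}",
        f"#SBATCH --cpus-per-task={spec.cpus_per_task}",
        f"#SBATCH --mem={spec.mem}",
        f"#SBATCH --time={spec.time}",
    ]
    # Optional directives are emitted only when requested.
    if spec.partition:
        lines.append(f"#SBATCH --partition={spec.partition}")
    if spec.gpus > 0:
        lines.append(f"#SBATCH --gres=gpu:{spec.gpus}")
    lines.append("")
    lines.append(spec.command)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Illustrative values sized for a 32-core, 192GB general-purpose Intel node.
    spec = JobSpec(job_name="demo", command="srun ./my_app", nodes=2,
                   ntasks_per_node=32, mem="180G", time="02:00:00")
    print(make_sbatch_script(spec))
```

To use it, save the output to a file such as `job.sh` on hpc2021.hku.hk and submit it with `sbatch job.sh`. The memory request leaves some headroom below the node's physical RAM for the operating system.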

Users can access the cluster only through the frontend nodes:

- hpc2021.hku.hk, which is reserved for editing and compiling programs and for submitting and managing jobs;

- hpc2021-io1.hku.hk and hpc2021-io2.hku.hk, which are reserved for file transfer, file management and data analysis/visualization.
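The split between the login node and the two I/O nodes can be captured in a small helper. This is an illustrative sketch only. The function names and the example user name `alice` are hypothetical, and the helper just builds `ssh` and `rsync` argument lists that you can pass to `subprocess.run` yourself.

```python
"""Route tasks to the right HPC2021 frontend (illustrative sketch)."""

from typing import List

# Roles as described above: the main login node for editing, compiling and
# job submission; the two I/O nodes for transfers and data analysis.
LOGIN_HOST = "hpc2021.hku.hk"
IO_HOSTS = ("hpc2021-io1.hku.hk", "hpc2021-io2.hku.hk")


def ssh_login_cmd(user: str) -> List[str]:
    """Interactive SSH session on the login node (compile, submit jobs)."""
    return ["ssh", f"{user}@{LOGIN_HOST}"]


def rsync_upload_cmd(user: str, local_path: str, remote_path: str,
                     io_index: int = 0) -> List[str]:
    """Copy a local file or directory to the cluster through an I/O node."""
    host = IO_HOSTS[io_index % len(IO_HOSTS)]
    # -a: recurse and preserve permissions/timestamps; -v: list files;
    # -P: show progress and keep partially transferred files for resuming.
    return ["rsync", "-avP", local_path, f"{user}@{host}:{remote_path}"]
```

For example, `subprocess.run(rsync_upload_cmd("alice", "results/", "~/results/"), check=True)` would copy a local directory through hpc2021-io1.hku.hk, leaving the main login node free for compilation and job submission.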

System Diagram

Login nodes

HPC2021 provides 3 login (frontend) nodes for users to interact with the system.

| Host name | Configuration |
|---|---|
| hpc2021.hku.hk | 2 x Intel Xeon Gold 6226R (16-core) CPU; 96GB RAM; 2 x 240GB SSD; dual 25GbE (campus network) |
| hpc2021-io1.hku.hk | 2 x Intel Xeon Gold 6226R (16-core) CPU; 192GB RAM; 2 x 240GB SSD; NVIDIA RTX6000 GPU (24GB GDDR6 ECC memory); dual 25GbE (campus network) |
| hpc2021-io2.hku.hk | 2 x Intel Xeon Gold 6226R (16-core) CPU; 192GB RAM; 2 x 240GB SSD; NVIDIA RTX6000 GPU (24GB GDDR6 ECC memory); dual 25GbE (campus network) |

Compute nodes

HPC2021 has 4 types of compute nodes, all accessible through the job scheduler, for a total of 198 nodes with 14,256 physical CPU cores, 168 GPUs and 74TB of system memory.

| Node Type | Configuration (core count is per CPU) | Quantity |
|---|---|---|
| General Purpose – Intel | Dual Intel Xeon Gold 6226R (16-core) CPU; 192GB RAM; 480GB SSD | 84 |
| General Purpose – AMD | Dual AMD EPYC 7542 (32-core) CPU; 256GB RAM; 480GB SSD | 28 |
| General Purpose – AMD | Dual AMD EPYC 7742 (64-core) CPU; 512GB RAM; 480GB SSD | 28 |
| General Purpose – AMD | Dual AMD EPYC 9654 (96-core) CPU; 768GB RAM; 480GB SSD | 10 |
| Special Purpose – Large RAM | Dual AMD EPYC 7742 (64-core) CPU; 2TB RAM; 2 x 12TB SAS hard drive; 1.92TB SSD | 2 |
| Special Purpose – GPU | Dual Intel Xeon Gold 6226R (16-core) CPU; 384GB RAM; 4 x NVIDIA Tesla V100 32GB SXM2 GPU; 6 x 2TB SATA hard drive; 960GB NVMe SSD | 4 |
| Special Purpose – GPU | Dual Intel Xeon Gold 6226R (16-core) CPU; 384GB RAM; 8 x NVIDIA Tesla V100 32GB SXM2 GPU; 6 x 2TB SATA hard drive; 960GB NVMe SSD | 3 |
| Special Purpose – GPU | Dual Intel Xeon Gold 6438M (32-core) CPU; 512GB RAM; 8 x NVIDIA L40S 48GB PCIe GPU; 3.84TB SSD; 7.68TB NVMe SSD | 5 |
| Special Purpose – GPU | Dual Intel Xeon Gold 6338T (24-core) CPU; 512GB RAM; 4 x NVIDIA L40S 48GB PCIe GPU; 480GB SSD; 7.68TB SSD | 15 |
| Condominium Model – AMD | Dual AMD EPYC 7742 (64-core) CPU; 512GB RAM; 480GB SSD | 8 |
| Condominium Model – AMD | Dual AMD EPYC 9654 (96-core) CPU; 768GB RAM; 480GB SSD | 8 |
| Condominium Model – GPU | Dual AMD EPYC 7543 (32-core) CPU; 1024GB RAM; 10 x NVIDIA L40 48GB PCIe GPU; 800GB SSD | 2 |
| Condominium Model – GPU | Dual AMD EPYC 7543 (32-core) CPU; 1024GB RAM; 8 x NVIDIA L40 48GB PCIe GPU; 800GB SSD | 1 |
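The aggregate figures quoted above can be checked against the table. The sketch below transcribes each row and sums nodes, cores, GPUs and memory. Every node is dual-socket, so cores per node is twice the per-CPU figure. The script also prints memory per core, which is handy when sizing SLURM memory requests. It assumes 1TB = 1024GB and counts the 2TB large-RAM nodes as 2048GB; both are assumptions about how the 74TB total was derived.

```python
"""Cross-check the compute-node table: totals and memory per core."""

from typing import List, NamedTuple, Tuple


class NodeType(NamedTuple):
    name: str
    cores_per_node: int  # both sockets combined
    ram_gb: int
    gpus_per_node: int
    count: int


# Transcribed from the table above; cores = 2 x cores per CPU.
NODE_TYPES: List[NodeType] = [
    NodeType("GP Intel 6226R", 2 * 16, 192, 0, 84),
    NodeType("GP AMD 7542", 2 * 32, 256, 0, 28),
    NodeType("GP AMD 7742", 2 * 64, 512, 0, 28),
    NodeType("GP AMD 9654", 2 * 96, 768, 0, 10),
    NodeType("SP Large RAM 7742", 2 * 64, 2048, 0, 2),
    NodeType("SP GPU 4xV100", 2 * 16, 384, 4, 4),
    NodeType("SP GPU 8xV100", 2 * 16, 384, 8, 3),
    NodeType("SP GPU 8xL40S", 2 * 32, 512, 8, 5),
    NodeType("SP GPU 4xL40S", 2 * 24, 512, 4, 15),
    NodeType("Condo AMD 7742", 2 * 64, 512, 0, 8),
    NodeType("Condo AMD 9654", 2 * 96, 768, 0, 8),
    NodeType("Condo GPU 10xL40", 2 * 32, 1024, 10, 2),
    NodeType("Condo GPU 8xL40", 2 * 32, 1024, 8, 1),
]


def totals(types: List[NodeType]) -> Tuple[int, int, int, int]:
    """Return (nodes, cores, GPUs, RAM in GB) summed over all node types."""
    nodes = sum(t.count for t in types)
    cores = sum(t.cores_per_node * t.count for t in types)
    gpus = sum(t.gpus_per_node * t.count for t in types)
    ram_gb = sum(t.ram_gb * t.count for t in types)
    return nodes, cores, gpus, ram_gb


if __name__ == "__main__":
    nodes, cores, gpus, ram_gb = totals(NODE_TYPES)
    print(f"{nodes} nodes, {cores:,} cores, {gpus} GPUs, {ram_gb / 1024:.1f} TB RAM")
    for t in NODE_TYPES:
        print(f"{t.name:20s} {t.ram_gb / t.cores_per_node:5.1f} GB per core")
```

The script reports 198 nodes, 14,256 cores, 168 GPUs and 75,648GB (about 73.9TB) of memory, which matches the totals stated above. Per core, the general-purpose Intel nodes provide 6GB and all three general-purpose AMD types provide 4GB.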

Data Storage

Three types of network storage are accessible from all nodes in HPC2021.

- A Lustre parallel file system provides high-bandwidth file services for parallel workloads.

- A network file system backed by ZFS offers snapshots that protect against accidental or malicious data loss.

- A network file system backed by Pure Storage also offers snapshots that protect against accidental or malicious data loss.

| Storage Type | Configuration | Usable Capacity |
|---|---|---|
| Lustre parallel file system | Cray ClusterStor L300 | 1.2PB |
| Network file system | ZFS with snapshots | 830TB |
| Network file system | Pure Storage with snapshots | 500TB |

System Interconnect

- Connection to campus network: 2 x 25GbE

- Cluster interconnect: 200Gb/s HDR InfiniBand in a two-tier fat-tree topology, with full bandwidth within each rack and 3:1 blocking bandwidth across racks
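The 3:1 blocking figure can be made concrete with a short calculation. The sketch below computes the worst-case per-node bandwidth when every node on a leaf (rack) switch sends traffic to other racks at the same time. The port split is hypothetical: 30 node-facing ports and 10 uplinks on a leaf switch would give 3:1. All links are also assumed to run at 200Gb/s. The actual HPC2021 switch layout is not described here.

```python
"""Worst-case per-node bandwidth in a two-tier fat tree (illustrative sketch)."""


def blocking_ratio(down_ports: int, up_ports: int) -> float:
    """Oversubscription of a leaf switch: node-facing ports per uplink."""
    return down_ports / up_ports


def cross_rack_bw_per_node(link_gbps: float, down_ports: int, up_ports: int) -> float:
    """Bandwidth each node gets when all nodes on one leaf switch send
    off-rack simultaneously and share the uplinks evenly."""
    if down_ports <= 0 or up_ports <= 0:
        raise ValueError("port counts must be positive")
    uplink_capacity = up_ports * link_gbps
    # A node never exceeds its own link rate; otherwise the uplinks are the bottleneck.
    return min(link_gbps, uplink_capacity / down_ports)


if __name__ == "__main__":
    # Hypothetical leaf switch: 30 ports to nodes, 10 to the spine, 200Gb/s links.
    print(blocking_ratio(30, 10))                       # 3.0
    print(f"{cross_rack_bw_per_node(200, 30, 10):.1f}")  # 66.7 Gb/s per node
```

Under these assumptions, each node gets roughly 66.7Gb/s for cross-rack traffic in the worst case. Traffic that stays within a rack is limited only by the node's own link. Placing tightly coupled MPI ranks within a rack therefore avoids the blocking tier.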